Abstract

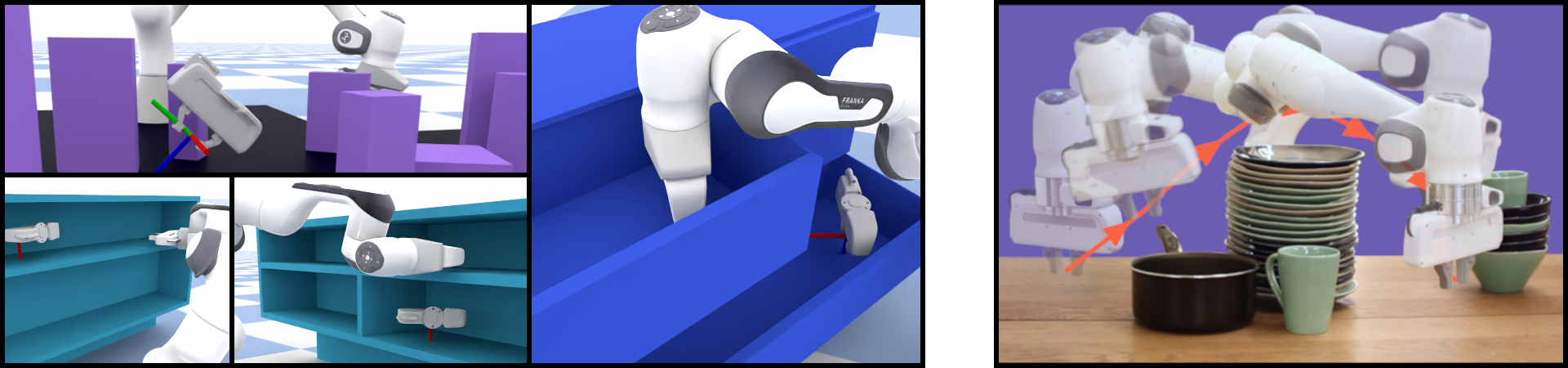

Collision-free motion generation in unknown environments is a core building block for robot manipulation. Generating such motions is challenging because of multiple objectives: not only should the solutions be optimal, but the motion generator itself must also be fast enough for real-time performance and reliable enough for practical deployment. A wide variety of methods have been proposed, ranging from local controllers to global planners, and these are often combined to offset their shortcomings. We present an end-to-end neural model called Motion Policy Networks (MπNets) to generate collision-free, smooth motion from just a single depth camera observation. MπNets are trained on over 3 million motion planning problems in over 500,000 environments. Our experiments show that MπNets are significantly faster than global planners while exhibiting the reactivity needed to deal with dynamic scenes. They are 46% better than prior neural planners and more robust than local control policies. Despite being trained only in simulation, MπNets transfer well to the real robot with noisy partial point clouds.

Acknowledgements

We would like to thank the many people who have assisted in this research. In particular, we would like to thank Mike Skolones for supporting this research at NVIDIA; Balakumar Sundaralingam and Karl Van Wyk for their help in evaluating MπNets and benchmarking it against STORM and Geometric Fabrics, respectively; Ankur Handa, Chris Xie, Arsalan Mousavian, Daniel Gordon, and Aaron Walsman for their ideas on network architecture, 3D machine learning, and training; Nathan Ratliff and Chris Paxton for their help in shaping the idea early on; Aditya Vamsikrishna, Rosario Scalise, Brian Hou, and Shohin Mukherjee for their help in exploring ideas for the expert pipeline; Jonathan Tremblay for his visualization expertise; Yu-Wei Chao and Yuxiang Yang for their help with using the PyBullet simulator; and Jennifer Mayer for editing the final paper.